Keyword [DGA]

Yang S, Li G, Yu Y. Dynamic Graph Attention for Referring Expression Comprehension[J]. arXiv preprint arXiv:1909.08164, 2019.

1. Overview

1.1. Motivation

- Existing methods either treat objects in isolation or explore only the direct relationships between objects, without aligning these relationships with the expression

This paper proposes the Dynamic Graph Attention Network (DGA), with the following key elements:

1) multi-step reasoning

2) differential analyzer module

3) static graph attention module

4) dynamic graph attention module

5) matching module

1.2. Dataset

- RefCOCO

- RefCOCO+

- RefCOCOg

2. DGA

2.1. Reasoning Structure Analyzer

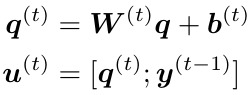

Outputs the weight $r_l^{(t)}$ of each word $l$ at each time step $t$.

$q$. expression feature
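A minimal sketch of how per-step word weights $r_l^{(t)}$ could be produced, assuming each step $t$ has its own query vector (the hypothetical `step_queries`) that is scored against every word feature and normalised with a softmax over words. This illustrates step-wise word attention in general, not the paper's exact analyzer.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def word_weights_per_step(word_feats: List[Vector],
                          step_queries: List[Vector]) -> List[List[float]]:
    """Return r[t][l], the weight of word l at reasoning step t.

    word_feats:   one feature vector per word (hypothetical input)
    step_queries: one query vector per step (hypothetical, e.g. learned)
    """
    return [softmax([dot(u, h) for h in word_feats]) for u in step_queries]


words = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # three toy word features
queries = [[2.0, 0.0], [0.0, 2.0]]            # T = 2 reasoning steps
r = word_weights_per_step(words, queries)
```

Each row of `r` sums to 1, so every step spreads one unit of attention over the words; in this toy input step 0 focuses on the first word and step 1 on the second.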

2.2. Static Graph Attention Module

Output:

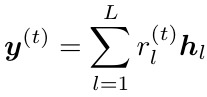

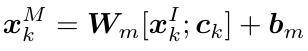

1) $α_{k,l}$. weight between node $k$ and word $l$

2) $c_k$. expression feature for node $k$

3) $β_{n,l}$. weight between edge $n$ and word $l$
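The node-word weights $α_{k,l}$ and the node expression feature $c_k$ can be sketched as word attention pooled per node. This assumes $α_{k,l}$ is a softmax over words of a dot-product score and that $c_k = \sum_l α_{k,l} h_l$ for hypothetical word features $h_l$; applying the same helper to edge features would give $β_{n,l}$. The paper's exact scoring function may differ.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_words(item_feats: List[Vector],
                 word_feats: List[Vector]) -> Tuple[List[List[float]], List[Vector]]:
    """For each item (node or edge), weight the words and pool them.

    weights[i][l]: alpha_{k,l} for nodes (or beta_{n,l} for edges)
    pooled[i]:     weighted sum of word features (c_k for nodes)
    Assumes items and words already share one feature dimension; a real
    model would project them first (hypothetical simplification).
    """
    dim = len(word_feats[0])
    weights, pooled = [], []
    for x in item_feats:
        w = softmax([sum(a * b for a, b in zip(x, h)) for h in word_feats])
        c = [sum(w[l] * word_feats[l][d] for l in range(len(word_feats)))
             for d in range(dim)]
        weights.append(w)
        pooled.append(c)
    return weights, pooled


words = [[1.0, 0.0], [0.0, 1.0]]
nodes = [[3.0, 0.0], [0.0, 3.0]]
alpha, c = attend_words(nodes, words)
```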

1) edge $e_{ij} \in \{0, 1, \dots, 11\}$. relation type between objects $i$ and $j$

0 = 'no relation'; 1 = 'inside'; …; 11 = 'bottom right'

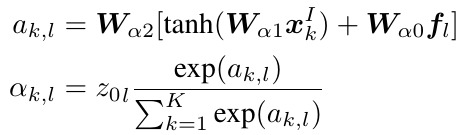

2) $x_k^I = [x_k^o; p_k]$. node of $G_I$: concatenation of the visual feature $x_k^o$ and the spatial feature $p_k$

3) node of $G_M$. node feature $x_k^I$ of $G_I$ combined with the expression feature $c_k$

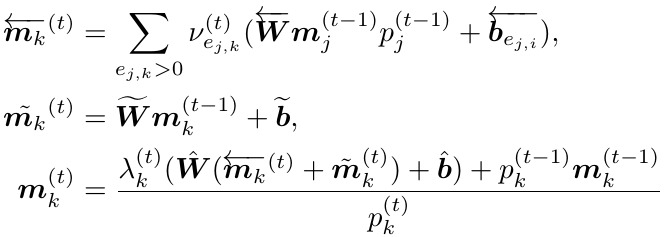
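A sketch of the graph construction, assuming the edge labels $e_{ij}$ are stored as an integer matrix where 0 ('no relation') means no edge, and assuming the $G_M$ node is the concatenation $[x_k^I; c_k]$. All function and variable names are hypothetical, and the non-zero labels in the toy input are arbitrary relation IDs.

```python
from typing import Dict, List

Vector = List[float]

NO_RELATION = 0  # label 0 = 'no relation', i.e. no edge


def concat(*parts: Vector) -> Vector:
    """[a; b; ...]: plain vector concatenation."""
    out: Vector = []
    for part in parts:
        out.extend(part)
    return out


def build_graphs(visual: List[Vector], spatial: List[Vector],
                 expr_feats: List[Vector], edge_types: List[List[int]]):
    """Return (G_I node features, G_M node features, neighbour lists).

    visual[k] = x_k^o, spatial[k] = p_k, expr_feats[k] = c_k,
    edge_types[i][j] = e_ij in {0, ..., 11}.
    Combining x_k^I and c_k by concatenation is an assumption.
    """
    nodes_i = [concat(v, p) for v, p in zip(visual, spatial)]
    nodes_m = [concat(x, c) for x, c in zip(nodes_i, expr_feats)]
    neighbours: Dict[int, List[int]] = {
        i: [j for j, e in enumerate(row) if j != i and e != NO_RELATION]
        for i, row in enumerate(edge_types)
    }
    return nodes_i, nodes_m, neighbours


visual = [[0.2, 0.8], [0.9, 0.1], [0.5, 0.5]]              # x_k^o
spatial = [[0.0, 0.0, 0.5, 0.5], [0.5, 0.5, 1.0, 1.0],
           [0.1, 0.6, 0.3, 0.9]]                           # p_k, e.g. box coords
expr = [[1.0], [0.0], [0.5]]                              # c_k
edges = [[0, 5, 0],   # non-zero labels: objects 0 and 1 are related
         [8, 0, 0],
         [0, 0, 0]]   # object 2 has no relations
nodes_i, nodes_m, neighbours = build_graphs(visual, spatial, expr, edges)
```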

2.3. Dynamic Graph Attention Module

Outputs the feature $m_k^{(t)}$ of node $k$ at each time step $t$.

1) update $α_{k,l}$ and $β_{n,l}$ using the word weights $r_l^{(t)}$

2) update the node feature $m_k^{(t)}$ from its previous feature $m_k^{(t-1)}$ and the features $m_j^{(t-1)}$ of its connected nodes $j$
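One hypothetical reading of a single dynamic step: node and edge weights at step $t$ are the word weights $r^{(t)}$ pooled through $α$ and $β$, and each node adds the weighted step-$(t-1)$ features of its neighbours to its own (a residual message-passing update). The paper's exact update rule may differ; the helper names below are illustrative only.

```python
from typing import Dict, List, Tuple

Vector = List[float]
Edge = Tuple[int, int]  # (k, j): node k receives a message from node j


def step_weights(r_t: List[float], word_attn: List[List[float]]) -> List[float]:
    """Hypothetical: weight of item i at step t = sum_l r_l^(t) * word_attn[i][l]."""
    return [sum(r * a for r, a in zip(r_t, row)) for row in word_attn]


def propagate(m_prev: List[Vector], edge_w: Dict[Edge, float],
              node_w: List[float]) -> List[Vector]:
    """m_k^(t) = m_k^(t-1) + sum over edges (k, j) of edge_w * node_w[j] * m_j^(t-1)."""
    m_next = [list(m_k) for m_k in m_prev]  # residual: start from m^(t-1)
    for (k, j), w in edge_w.items():
        scale = w * node_w[j]
        m_next[k] = [a + scale * b for a, b in zip(m_next[k], m_prev[j])]
    return m_next


def dynamic_reasoning(m0: List[Vector], edges: List[Edge],
                      alpha: List[List[float]], beta: List[List[float]],
                      r: List[List[float]]) -> List[Vector]:
    """Run T = len(r) steps; beta[n] holds the word weights of edges[n]."""
    m = m0
    for r_t in r:
        node_w = step_weights(r_t, alpha)
        edge_w = dict(zip(edges, step_weights(r_t, beta)))
        m = propagate(m, edge_w, node_w)
    return m


m0 = [[1.0, 0.0], [0.0, 1.0]]
edges = [(0, 1)]                  # node 0 listens to node 1
alpha = [[1.0, 0.0], [0.0, 1.0]]  # node 0 <-> word 0, node 1 <-> word 1
beta = [[0.5, 0.5]]
r = [[0.0, 1.0]]                  # a single step that focuses on word 1
m_T = dynamic_reasoning(m0, edges, alpha, beta, r)
```

Messages are always read from `m_prev`, so at step $t$ every node sees only step-$(t-1)$ features of its neighbours, consistent with the description above.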

2.4. Matching Module

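Computes a matching score for each node from its final updated feature $m_k^{(T)}$ and the expression feature $q$; the object with the highest score is taken as the referent.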
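A minimal matching sketch, assuming cosine similarity between $m_k^{(T)}$ and $q$ as the score (the paper's scoring function may differ) and that both vectors share one dimension. `match` is a hypothetical helper name.

```python
import math
from typing import List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def match(final_nodes: List[Vector], q: Vector) -> Tuple[int, List[float]]:
    """Score every node against q and return (best index, all scores)."""
    scores = [cosine(m, q) for m in final_nodes]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


m_T = [[1.0, 0.5], [0.0, 1.0], [-1.0, 0.0]]  # toy final node features
q = [1.0, 0.0]                               # toy expression feature
best, scores = match(m_T, q)
```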

3. Experiments